This event was the eighth in the AI3SD Autumn Seminar Series, which ran from October 2021 to December 2021. The seminar was hosted online as a Zoom webinar. Its theme was Molecules, Graphs & Networks, and it consisted of two talks on the subject. Below are the videos of the talks and the speaker biographies. The full playlist of this seminar can be found here.

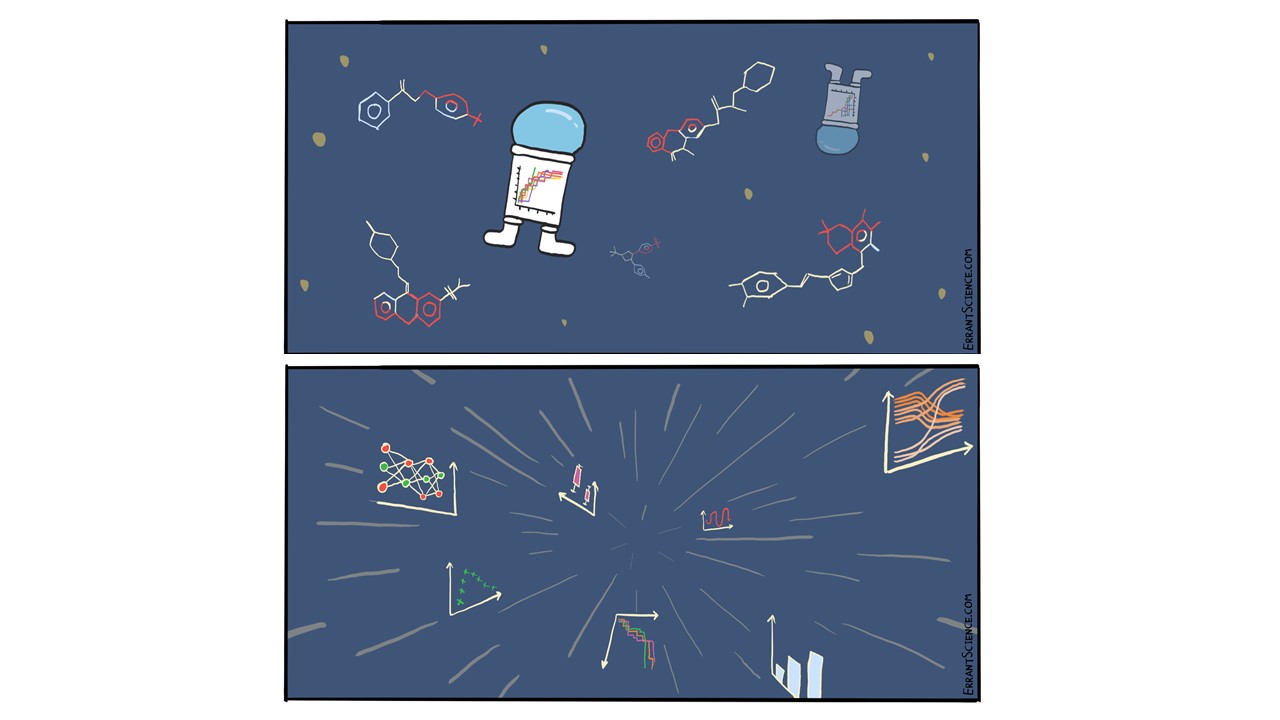

Chemical Space Exploration – Jan Jensen

Jan H. Jensen obtained his PhD in theoretical chemistry in 1995 from Iowa State University working with Mark Gordon, where he continued as a postdoc until he joined the faculty at the University of Iowa in 1997. In 2006 he moved to the University of Copenhagen, where he is now professor of computational chemistry.

Q & A

Q1. Did you have any problems with maintaining diversity in your populations? And how did you deal with it?

Yes, we do. Each individual genetic algorithm search tends to lose a lot of diversity, because you’re zeroing in on one particular target. But if you run a different genetic algorithm search, it zeroes in on another molecule. So, if you combine the final populations of several runs, then you have fairly good diversity. The question now is whether there are ways to increase or force diversity, basically by including diversity in the scoring function. Is it better to run fewer genetic algorithm searches with this diversity parameter, or is it better to run many genetic algorithm searches without the diversity criterion and then combine the final populations? That’s one of the things we have to look at. If you can only afford to run one genetic algorithm search, then yes, you need to somehow ensure that the population is diverse. That can be done just by insisting that the molecules have, for example, different molecular weights, or different values of other properties that are fast to compute.
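As a rough illustration of "including diversity in the scoring function", the sketch below subtracts a crowding penalty from each raw score. It is not the speaker's implementation: the set-of-features fingerprints, the mean Tanimoto similarity as the crowding measure, and the `weight` parameter are all assumptions made for this example.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of features."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def diversity_adjusted_scores(scores, fingerprints, weight=0.5):
    """Penalise individuals that resemble the rest of the population.

    scores       -- raw scores, higher is better
    fingerprints -- one set of (hypothetical) structural features per individual
    weight       -- assumed trade-off between raw score and diversity
    """
    n = len(scores)
    adjusted = []
    for i in range(n):
        # Mean similarity of individual i to every other individual.
        sims = [tanimoto(fingerprints[i], fingerprints[j])
                for j in range(n) if j != i]
        crowding = sum(sims) / len(sims) if sims else 0.0
        adjusted.append(scores[i] - weight * crowding)
    return adjusted


# Two near-duplicates with the top raw score, plus one distinct molecule.
fps = [{1, 2, 3}, {1, 2, 3}, {7, 8, 9}]
raw = [1.0, 1.0, 0.9]
adjusted = diversity_adjusted_scores(raw, fps)
```

With these toy inputs the distinct third individual ends up ranked above the two duplicates, even though its raw score is slightly lower.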

Q2. In GA, how do you determine the population size?

That’s trial and error, and it also depends on how expensive your scoring function is. If your scoring function is very expensive, you typically have to keep your population size low and also the number of generations low, and just hope for the best. So basically, it’s an empirical parameter. You can help ensure that small populations work by doing some pre-screening. Instead of just randomly populating your initial population, you could pre-screen available or known molecules for the property of interest and then only include the high-scoring ones in your initial population. Then you could probably get away with a smaller population, but there’s a lot of empiricism in these genetic algorithm runs.
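The pre-screening idea can be sketched as follows. This is only an illustration under assumptions: `cheap_score` stands for whatever fast estimate of the property of interest is available, and the function name and toy candidates are hypothetical.

```python
import heapq


def prescreened_population(candidates, cheap_score, size):
    """Seed the initial population with the `size` best-scoring known candidates.

    candidates  -- pool of available or known molecules (any objects)
    cheap_score -- fast, approximate scoring function; higher is better
    size        -- desired initial population size
    """
    return heapq.nlargest(size, candidates, key=cheap_score)


# Toy example: strings stand in for molecules, length stands in for the score.
pool = ["C", "CCO", "CC", "CCCC"]
initial = prescreened_population(pool, len, 2)
```

The expensive scoring function is then only spent on a population that already starts from promising molecules.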

Q3. How often do the TSs found by xTB give more than one negative frequency with DFT?

We haven’t kept track of how often the transition state search succeeds. When it doesn’t succeed, it’s often because you have two or more imaginary frequencies. We haven’t really addressed that problem specifically, but the success rate is usually fairly good, better than 50%. Basically, it depends on how much time and effort you want to spend on finding that transition state. If a molecule has a very good score at the semi-empirical level, there are many things you can try to get your transition state search to work. But if you just do a first pass and give it 50 molecules, then maybe 25 will work at the first shot, and the rest you have to go in and deal with manually. That is by far where we spend most of our computational effort: not in the genetic algorithm, but in the DFT refinement of the final population. We’ve now also added a synthetic accessibility measure, including in the catalyst search. Before we start looking at transition states, we do as much synthetic accessibility analysis as we can, because if you can’t make the molecule anyway, there’s no point in trying to find a transition state. But if everything lines up, and you have a molecule that is very promising at the semi-empirical level and looks easy to make, then we would spend a lot of effort trying to find the DFT-level transition state to verify it.

Q4. Are there any methods which you applied to your GA to accelerate the evolution?

No. So far, a straight ‘plain vanilla’ genetic algorithm has worked. The one thing we have messed around with a bit is the selection criterion. When I say we pick according to score, there are actually many ways of doing that. In one, the probability is directly proportional to the score. You can also just rank the molecules and then pick according to rank in the population, and things like that. For some scoring functions that has some effect. But we haven’t really had to use the other acceleration methods that are out there yet, because when we run this very simple genetic algorithm code, we get enough promising candidates to try to refine at the DFT level. That’s where we end up spending most of our time: not generating more things to try, but actually trying them.
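The two selection schemes mentioned in this answer can be sketched as below. This is a minimal illustration rather than the speaker's code: the function names are hypothetical, and it assumes non-negative scores where higher is better.

```python
import random


def selection_probabilities(scores, scheme="proportional"):
    """Return the probability of picking each individual as a parent."""
    if scheme == "proportional":
        # Probability directly proportional to the raw score.
        weights = list(scores)
    elif scheme == "rank":
        # Probability proportional to rank: worst gets 1, best gets n.
        order = sorted(range(len(scores)), key=lambda i: scores[i])
        weights = [0] * len(scores)
        for rank, idx in enumerate(order, start=1):
            weights[idx] = rank
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    total = sum(weights)
    return [w / total for w in weights]


def select_parents(population, scores, k, scheme="proportional", seed=None):
    """Draw k parents (with replacement) under the chosen scheme."""
    rng = random.Random(seed)
    probs = selection_probabilities(scores, scheme)
    return rng.choices(population, weights=probs, k=k)


scores = [1.0, 2.0, 97.0]
p_prop = selection_probabilities(scores, "proportional")
p_rank = selection_probabilities(scores, "rank")
parents = select_parents(["A", "B", "C"], scores, k=4, scheme="rank", seed=0)
```

With one dominant score, proportional selection picks the top individual 97% of the time, while rank selection picks it only half the time. This is one way the choice of scheme can matter for some scoring functions.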

Q5. For the total chemical space of 10^60, does that include molecules of all sizes? About what is the chemical space size for reasonable drug-sized molecules (with, say, fewer than 50 carbons)?

This estimate was basically made for drug-like molecules. These are small organic molecules with not too many different atoms. The number is not large because of high molecular weights or because of unusual atoms like silicon or boron; these are just plain organic molecules. There are so many of them because there are so many functional groups that can be combined in so many different ways. Personally, if we count all possible molecules, I actually believe the space is even bigger. What’s not clear is what percentage would be judged synthetically accessible. That’s the question, and I think that’s why you often see lower estimates of this space: they come from people trying to estimate the percentage of synthetically accessible molecules. But of course, that will change as the synthetic machinery gets better.

Q6. Do you use xTB’s optimizer or ORCA’s optimizer?

xTB. I think the xTB optimizer is fine, but the real issue here is speed. By speed I also mean the set-up: getting the program loaded into memory and getting it started. If you’re doing thousands of very fast xTB calculations, these things start to matter. The big quantum chemistry programs are huge, and you’re loading a lot of stuff into memory that you don’t actually need for the xTB calculations. Those things are not really time consuming on their own, but they are if you’re doing them thousands of times.

Q7. I missed how he got the SA values, mind showing it again?

There will be a reference in this paper (Steinmann, Casper, and Jan H. Jensen. 2021. “Using a Genetic Algorithm to Find Molecules with Good Docking Scores.” PeerJ Physical Chemistry 3 (May): e18.). It’s basically an analysis of molecules that have been made, looking at which fragments are in there. If you have a high percentage of those fragments in your molecule, then it’ll judge it to be synthetically accessible. It’s an old method, at least 10 years old or something like that. The reference will be in here (Ertl P, Schuffenhauer A. 2009. Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. Journal of Cheminformatics 1(1):8.).

Hyperparameter Optimisation for Graph Neural Networks – James Yuan

Yingfang (James) Yuan is a PhD candidate working under the supervision of Dr Wei Pang, Prof. Mike Chantler and Prof. George M. Coghill (external) in the School of Mathematical and Computer Sciences at Heriot-Watt University. Yingfang received his MSc in Big Data and High-Performance Computing at the University of Liverpool. His research interests include Machine Learning and Deep Learning, especially Graph Neural Networks and AutoML. He is particularly focused on investigating the impact of hyperparameter optimization on graph neural networks applied to predicting molecular properties, and the efficiency of hyperparameter optimization approaches.

Q & A

Q1: What got you interested in this topic for your PhD?

It is related to our project goal. I think it’s motivated by our EPSRC project, which aims to find and develop self-healing materials. We play the computer science role in that group, and we build models that help our colleagues with their work in materials science, for example by improving experimental strategy. Meanwhile, molecular property prediction is a significant task for many research problems, which means our work could be applied to a wider range of problems.

Q2: Are these able to be applied to other ML networks? How hard are they to implement? Where can I learn to implement them?

I think the core of today’s topic includes GNNs and Hyperparameter Optimisation. Hyperparameter Optimisation is quite a wide and general topic that applies to most machine learning methods, not just graph neural networks. I think it’s not hard to implement because there are just two parts: the model you want to use and the hyperparameter optimisation method you select. You just need to combine them in an iterative way. You will probably need to wait a relatively long time, because hyperparameter tuning is costly. In addition, there are quite a lot of Python libraries, like Optuna and Hyperopt, which provide ready-to-use functions and classes for Hyperparameter Optimisation.
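The "combine them in an iterative way" loop can be sketched with plain random search in standard-library Python. This is a stand-in for illustration only: it does not use the Optuna or Hyperopt APIs, and the search space, the toy loss function, and all names below are assumptions. In practice `toy_validation_loss` would train a model and return its validation error.

```python
import math
import random

HIDDEN_SIZES = [16, 32, 64, 128, 256]  # assumed search space


def toy_validation_loss(params):
    """Stand-in for training a model and returning its validation loss."""
    # Hypothetical loss surface with its minimum at lr=1e-3, hidden=64.
    lr_term = (math.log10(params["lr"]) + 3) ** 2
    size_term = ((params["hidden"] - 64) / 64) ** 2
    return lr_term + size_term


def sample_params(rng):
    """Draw one hyperparameter solution from the search space."""
    return {
        "lr": 10 ** rng.uniform(-5, -1),       # log-uniform learning rate
        "hidden": rng.choice(HIDDEN_SIZES),    # hidden layer width
    }


def random_search(objective, n_trials, seed=0):
    """Iterate: propose hyperparameters, evaluate the model, keep the best."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = sample_params(rng)   # part 1: the optimiser proposes
        loss = objective(params)      # part 2: the model is evaluated
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best_params, best_loss = random_search(toy_validation_loss, n_trials=50)
```

Libraries such as Optuna replace the random proposal step with smarter samplers, but the overall loop has the same two parts. The cost comes from the objective, since each trial means a full model training run.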

Q3: Did you initialise the weights from new each iteration?

This is a good question. In our research, we initialise the weights anew in each iteration, where one iteration denotes the process of searching for and evaluating one hyperparameter solution. However, it is possible to keep all or part of the weights fixed for the next hyperparameter solutions, which is more common in neural architecture search.

Q4: Apart from Optuna, what sort of open python packages are there to use?

Apart from Optuna, do you mean Python packages for GNNs or Python packages for Hyperparameter Optimisation? Optuna is for Hyperparameter Optimisation. If you want to implement graph networks, you can use TensorFlow, PyTorch and Deep Graph Library. DeepChem is more for deep learning in science.