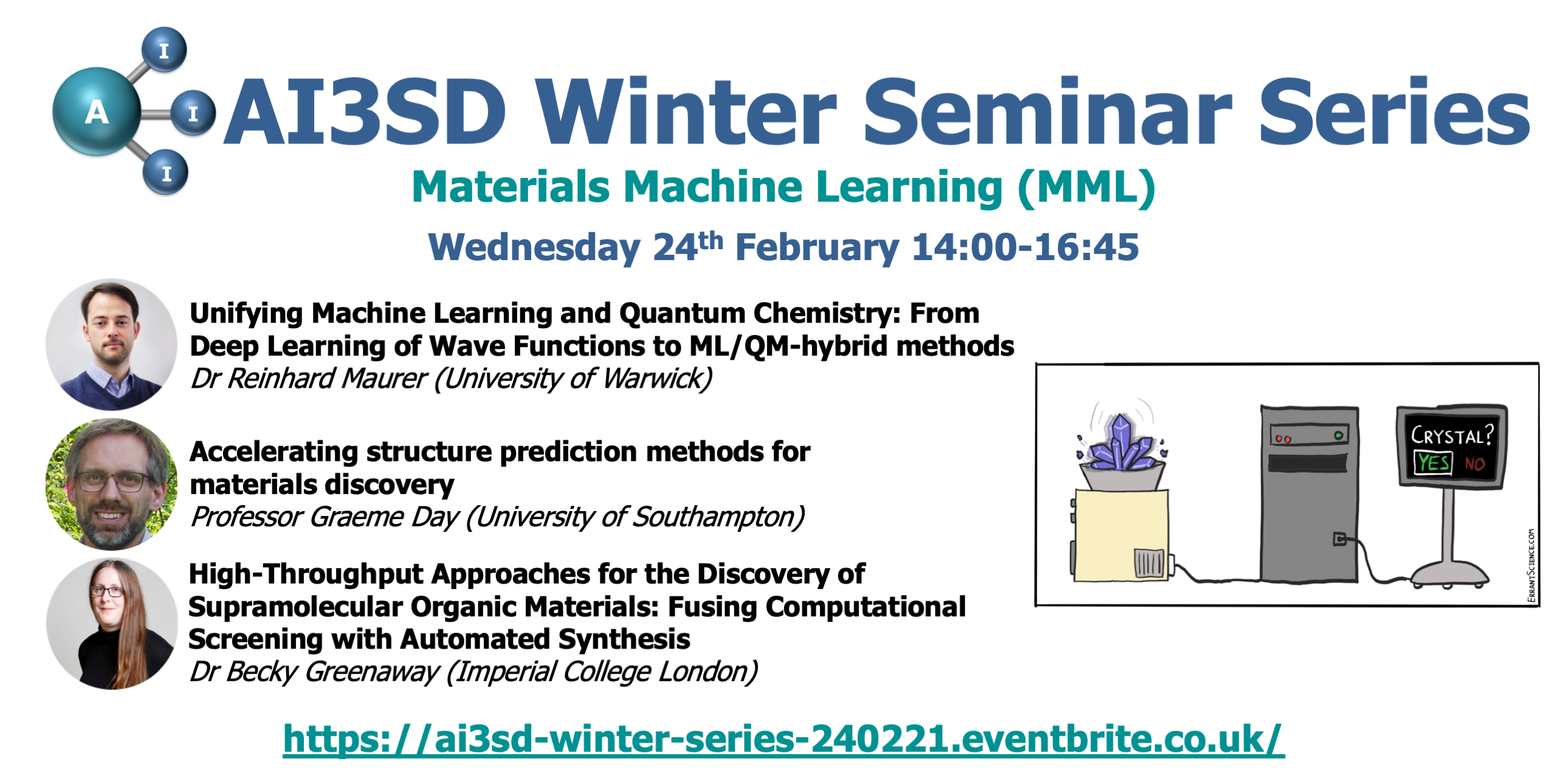

This seminar was the seventh of ten in our AI3SD Winter Seminar Series. This seminar was hosted online via a zoom webinar. The event was themed around Materials Machine Learning (MML), and consisted of three talks on this subject. Below are the videos of the first two talks with speaker biographies, and the full playlist of this seminar can be found here.

Unifying Machine Learning and Quantum Chemistry: From Deep Learning of Wave Functions to ML/QM-hybrid methods – Dr Reinhard Maurer (University of Warwick)

Reinhards research focuses on the theory and simulation of molecular reactions on surfaces and in materials. Reinhard studies the structure, composition, and reactivity of molecules interacting with solid surfaces. Reinhards goal is to find a detailed understanding of the explicit molecular-level dynamics of molecular reactions as they appear in catalysis, photochemistry, and nanotechnology. Members of Reinhards research group develop and use electronic structure theory, quantum chemistry, molecular dynamics, and machine learning methods to achieve this.

Q & A Session

Q1 Would you still recommend using a machine learning model for orbitals instead of observables if using a coupled cluster method like CCSDT?

I think so, it depends on what you want to do. If you’re interested in only that observable and nothing else, then obviously train a model on that observable, everything else would be ridiculous. But, if you’re interested in getting a parametrisation that gives you access to derived quantities, (for example electronic states as a function of coordinates), potentially even states that don’t correspond to the ground state, so, where you can look at time dependent properties, then that’s probably not the best approach. You can think of all of what I’ve talked about as being about effective single particle theories, so Kohn-Sham or Hartree-Fock hamiltonians but, you can show that any many body correlated theory can be mapped onto an effective quasiparticle approach, so we can define effective representations of Hamiltonians for these theories and then use those as well

Q2 Could you please explain a bit more about the difference between restart Newton and restart? You mentioned this when you describe in using a machine learning model to reduce the number of SCF iterations. With this idea, work for CCSDT?

First of all, the difference between restart, and restart Newton. So, restart is just you take the default algorithm that most quantum chemistry codes will give you if they assume you do a standard SCF calculation and that will typically be a diagonalization algorithm, that is quite robust and conservative. Something based on iterative inversion of subspace or some type of density mixing. For example, if you have a very good guess and you’re in the quadratic convergence region of the SCF, you can move over to a Quasi-Newton algorithm, which is going to have exponential convergence. What we see is if you have a good guess and a very robust algorithm, you’re not making a lot of ground in terms of providing this better guess. If you use a Quasi-Newton algorithm, then you can actually see the benefit. and that Starting SCF from a random guess with a Quasi-Newton algorithm would never converge.

The second part of the question was about if thiswould work for CCSDT? The problem is a bit different. The iterative equations you have to solve for CCSDT are slightly different, but I don’t see why it wouldn’t work. There’s a great paper out (https://pubs.acs.org/doi/10.1021/acs.jpclett.9b01442) which predicts CC amplitudes which is then used to accelerate iterative convergence of Coupled Cluster equations. But, there’s lots of different ideas in this space where machine learning models don’t necessarily need to replace quantum chemistry, but they can support and facilitate quantum chemistry.

Q3 Could you please say a bit more about the problem with bigger molecules?

The problem with bigger molecules, are bigger matrices, basically. The problem isn’t necessarily that if we analyse the errors we get on our matrices, on the other Hamiltonian basis representations, it isn’t necessarily that the error on each of every element of the matrix is getting worse. That error is actually pretty constant or goes down, but, because we have a large matrix and we’re diagonalizing the matrix, we are forming quantities that are linear combinations of lots of different elements with small errors on them. For larger matrices, we’re compounding these errors when we calculate eigenvalues. So, it’s a problem that doesn’t scale well, that we need to figure out better ways of how to eliminate this issue. So one way is to reduce the dimensionality and the pre-factor in the scaling, by using a compact minimal basis that we can optimize, and so we are currently still working on figuring out what are the best possible orbital localization methods we can use in this context. The other problem is that we need to figure out how do we optimize our loss function, so we can tailor the error more efficiently for these larger systems.

Q4 The eigenvalues analytic function of the matrix elements. Can you not incorporate the eigen values into the training?

Yes, you are right, they are analytical functions, but they’re not smooth functions. So, they’re not smooth functions of coordinate space and the problem are discontinuities. It’s much more difficult to learn eigenvalues. The first thing we tried was just a brute force approach of learning eigenvalues and densities of states and that epically failed because you’re asking the machine level to learn how a diagonalization works on top of learning the coordinate dependence of the eigenvalues. So, you can think of the whole thing that I presented as just finding an intermediate representation that generates much smoother functions that are easier to learn. So, all of the integrals that are part of the Hamiltonian and are much, much smoother and much easier to learn than learning the eigenvalues themselves. At least, that’s our experience for the systems we’ve looked at. One point we do actually incorporate is the diagonalization into the training, it’s just not part of the neural network because, for example, in the loss function, we built the loss functions onto energy eigenvalues. So, we need to back propagate through the diagonalization.

Q5 What is the convergence rate of the iterative inversion method? I mean the other method from the quasi newton method, that works when the initial guess is not accurate? I was just curious about the two different methods you used for diagonalization?

One is a standard mixing algorithm, the other one is a second order solver

Accelerating structure prediction methods for materials discovery: Professor Graeme Day (University of Southampton)

Graeme Day is Professor of Chemical Modelling at the University of Southampton. His research concerns the development of computational methods for modelling the organic molecular solid state. A key focus of this work is the prediction of crystal structures from first principles; his research group applies these methods in a range of applications, including pharmaceutical solid form screening, NMR crystallography and computer-guided discovery of functional materials. After a PhD in computational chemistry at University College London, he spent 10 years at the University of Cambridge, where he held a Royal Society University Research Fellowship working mainly on modelling pharmaceutical materials and computational interpretation of terahertz spectroscopy. He moved to the University of Southampton in 2012, at which time he was awarded a European Research Council Starting Grant for the ‘Accelerated design and discovery of novel molecular materials via global lattice energy minimisation’ (ANGLE). This grant shifted the focus of his research to functional materials, including porous crystals and organic electronics. In 2020, he was awarded an ERC Synergy grant ‘Autonomous Discovery of Advanced Materials’ (ADAM) with Andrew Cooper (Liverpool) and Kerstin Thurow (Rostock) to integrate computational predictions, chemical space exploration with automation in the materials discovery lab. Graeme has served on the editorial boards of CrystEngComm, Faraday Discussions and on the advisory board of Molecular Systems Design & Engineering (MSDE).

Q & A Session

Q1: For small organic molecules, is the structure in a crystal the same as the structure in water? If not, how do you go from the structure in a crystal to the structure in water?

So, I guess what you’re talking about there is the molecular structure itself. That’s a good question, because all of the molecules that I showed you were chosen deliberately for these initial studies to be quite rigid molecules. This was so that the molecular structure would be very similar in the crystal to the single molecule calculations that we’ve done which are in vacuum, and we would assume also then similar in the solvent. That’s not the case as you get to more complex molecules. One of the big applications of crystal structure prediction is in pharmaceuticals where we’re looking for understanding whether there are more polymorphs of this molecule out there which would potentially have different properties. Pharmaceutical molecules are generally much more flexible, and we know that the conformation of the molecule in the crystal can be very different to what it is in the solvent and in the gas phase. To take account of that, what we have to do is to include intramolecular degrees of freedom, such rotatable bonds, at the very initial stage of generating trial crystal structures, at the same time that we sample the crystal packing degrees of freedom. Then, also at the energy minimization stage we will have to allow the molecular geometry to relax within each crystal structure because we know for these flexible molecules that the environment in the crystal will influence the molecular structure. So we have to account for this at two stages of the structure prediction process.

Q2: Why is it OK to ignore entropy?

We do worry about entropy because typically crystallisation is performed at room temperature so the dynamics of the molecules within the crystals are important. We’ve done some studies where we looked at the phonon density of states – the vibrational density of states – of different crystal structures of the same molecule. These might be observed polymorphs, or different predicted crystal structures of a molecule. From the vibrational density of states, we then evaluated entropy differences between known polymorphs and within predicted landscapes. If you go back to an earlier slide from my talk where I gave a reference for polymorph lattice energy differences, the main point of the study in that paper was to look at the lattice dynamical contributions to the free energy differences. What we found was that the typical entropy contributions to free energy differences if you then calculate them at room temperature are about 1/3 of the typical differences in the static lattice energies. So, these contributions will re-rank structures, but will be less important than the static energy differences. If you want to be very accurate then we know that we have to include the entropy and that might be an area for future work: to try to speed that up through machine learning as well, to predict these vibrational densities and states. But that’s why we, in a first approximation, can ignore entropy differences because we know their magnitudes are typically smaller than the static energy differences.

J. Nyman, G. M. Day, CrystEngComm, 2015,17, 5154-5165

J. Nyman, G. M. Day, Phys. Chem. Chem. Phys., 2016,18, 31132-31143

Q3: Why are there hundreds of different crystal structures named for one molecule? One of your slides showed Oxalic Acid has 476 crystal structures. I thought there would be one crystal structure for one molecule.

When I did crystallization as an undergraduate, we learned that there is one crystal structure for a given molecule. We now know a lot more about the occurrence of polymorphism where molecules, experimentally, have multiple crystal structures. So oxalic acid, as an example, has two experimentally observed crystal structures. We know some molecules out there that have a dozen or more known crystal structures for the same molecule These crystals are chemically identical but there are just differences in the packing of molecules. Now I’m showing you 476 crystal structures for oxalic acid, because those are all the local minima on the lattice energy surface for that molecule within an energy range we have chosen, which in this case was 20 kJ per molecule. So, for that molecule there are two known structures, but in the energy range just above the global energy minimum, there are nearly 500 predicted crystal structures. That’s the game: how do we distinguish those two structures in this set that will be observed, from the 474 that are unobserved crystal structures for that molecule. With the force field, we don’t do very well. One of those structures which is observed is lowest in energy of the predicted structures, but the other one is way up in the cloud of all these hundreds of others. When we correct things with the higher-level calculations, then the second observed structure also comes down and so the two observed structures are the two lowest in energy. The hundreds of structure that I’m showing or all of those that are predicted and come out of the computer, and that’s a big thing in crystal structure prediction of small molecules. We over-predict polymorphism. We always predict huge numbers of structures, many more than we ever see experimentally.

Q4: How many atoms were in the hybrid functional DFT calculation that would have taken 101 days without parallelization?

That 101 days would have been the total computational time that it took to do all of the crystal structures. So, that was for all 476 predicted crystal structures of oxalic acid. Those structures would have ranged in size. One oxalic acid molecule has eight atoms. A typical crystal structure has between 2 and 8 molecules in the unit cell. So, for that small molecule most of the crystal structure would have between 16 and 64 atoms in the unit cell. Something I didn’t mention is that you can be smart about how you choose your training set by only performing those highest-level calculations on structures which have the smaller unit cells, as long as they’re giving you enough diversity in the information that you get for training your Gaussian process.

Q5: When you fit the difference between levels of description, do you treat intra and intermolecular degrees of freedom differently?

That’s not something I covered in the talk because in this work we dealt with rigid molecules, and all of the energy corrections that we were applying were single point corrections. So, the force field calculations were done with a rigid molecule approach and so the intramolecular energy for every crystal structure is the same. All we’re learning are intermolecular energy differences. We’re doing follow up work to look at flexible molecules where we have differences in intramolecular energy as well. That’s not something that we have completed. We do know from work we’ve done several years back that we can use Gaussian processing to learn the intramolecular energy surface as well, but whether the same model will learn both together or we need to build separate models, we don’t know yet.

Q6: How do you treat dispersion in your high-level electronic structure calculations?

In our all of the work we used an empirical dispersion correction from Grimme which looks like a force field term. We have looked at other things, like many body dispersion models, as well because we know that that these can sometimes help distinguish structures.

Q7: Could this be applied to crystals with inorganic atoms in them, like metal organic frameworks?

Possibly. I don’t see why it couldn’t be applied. The way that we generate crystal structures is different if you have a network solid like a metal organic framework. You don’t have distinct units like you have molecules in molecular crystals because everything is connected through those metal centres in that example. However, once you’ve generated structures, then similar types of approaches could probably be used to then learn corrections to the calculated energies. This is a little bit of guesswork, as I haven’t done it, but I don’t see why it wouldn’t work. The interactions are different, and so things about the descriptors may need to be different, but I think the overall approach should probably be successful.

Q8: What are the problems when the molecular conformation space is large in addition to just a large space to sample?

When the conformational space is large then really, we just have a higher dimensional surface. So, we know for some drug molecules you may end up with hundreds of molecular conformations. If you’re going to sample those degrees of freedom at the same time as the crystal packing degrees of freedom, then your sampling space is much larger, and as your number of degrees of freedom go up then your sampling becomes more difficult. You need to generate more structures and you may need to be smarter in the way that you generate trial crystal structures. Some of it’s an open question of the best way to do things, but essentially, it’s just higher dimensions of the energy surface that we’re sampling. Also we’re then talking about conformations versus intermolecular interactions in different possible crystal structures. The scales of inter and intramolecular energy differences can be quite different, and that can cause additional problems as well. Rather than going on for another half hour talk on this topic, I’ll stop with that short answer.